Apple's new iPhone 12 Pro adds a LiDAR Scanner to its long list of innovative features. Lidar (light detection and ranging) gives the iPhone 12 Pro fast, precise depth sensing, resulting in better pictures and enhanced augmented reality.

Thanks to lidar, the iPhone 12 Pro can take Night mode portraits, and AR apps load and run faster than on earlier iPhones.

What should you expect from Apple's latest and greatest technology in the iPhone 12 Pro? Let's check it out.

Lidar in the iPhone 12 Pro

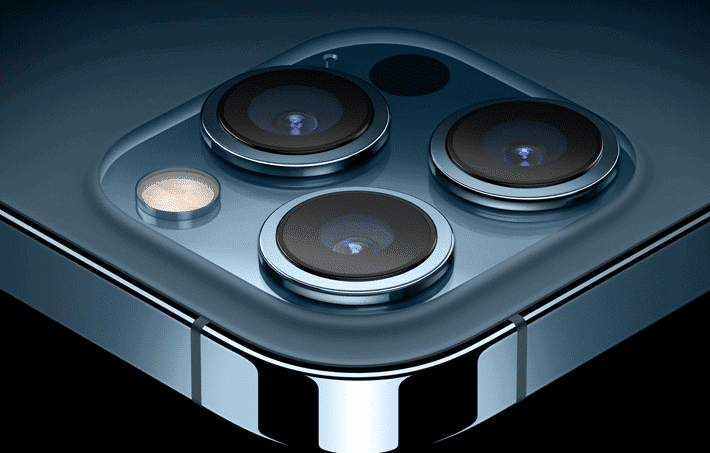

The iPhone 12 Pro and the 2020 iPad Pro have something extra. You may have noticed a small black dot near the camera lenses and flash: that's the lidar sensor. If you know your Apple products, you can't miss it. With its advanced depth sensing, this sensor might just change the game for phone cameras.

More importantly, lidar may take AR to the next level.

Lidar Technology and the iPhone 12 Pro's Camera

Lidar upgrades the iPhone 12 Pro's camera system. The sensor rapidly captures the depth of a room, and the iPhone 12 Pro applies that depth data when processing images, so photos look more realistic.
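Lidar is a time-of-flight technique: the sensor emits light, measures how long it takes to bounce back, and converts that round-trip time into a distance. The minimal Python sketch below shows only that arithmetic. The helper name and the 20-nanosecond sample are hypothetical, and a real sensor adds calibration and noise filtering that this ignores.

```python
# Speed of light in a vacuum, in meters per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the distance in meters to a surface, given the time a light
    pulse took to travel there and back (hypothetical helper)."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), so halve it.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


# A pulse that returns after 20 nanoseconds hit a surface about 3 m away.
print(distance_from_round_trip(20e-9))
```

The tiny times involved (nanoseconds for a room-sized distance) are why lidar can sample a whole scene so quickly.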

Lidar also improves autofocus for photos and videos, particularly in low light. Apple says the camera can autofocus up to six times faster in low-light scenes.

Check out this video by HiTechKing showing AR games on the iPad Pro, which uses the same lidar technology as the iPhone 12 Pro.

If you have ever taken photos at night, especially in Portrait mode, you know the results are rarely high quality. Apple says the iPhone 12 Pro can now take Night mode portraits with its Wide camera.

Apple also claims that the A14 Bionic chip, which drives the iPhone 12 Pro's camera processing, is up to 50% faster than the fastest competing smartphone chips.

How Lidar Enhances Augmented Reality in the iPhone 12 Pro

Augmented reality (AR) combines the real, physical world with computer-generated input such as audio, text, or graphics, all in real time.

Lidar speeds up AR on the iPhone 12 Pro, helping AR apps run efficiently. The sensor maps a room quickly and in more detail than before, for a richer experience.
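Mapping a room amounts to turning per-pixel depth readings into 3D points. A common way to do this is pinhole-camera back-projection, sketched below in Python. This is a generic technique, not Apple's pipeline, and the camera intrinsics (`fx`, `fy`, `cx`, `cy`) and the 2x2 depth map are made-up values for illustration.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_map_to_points(
    depth: List[List[float]], fx: float, fy: float, cx: float, cy: float
) -> List[Point3D]:
    """Back-project a depth map (meters per pixel) into camera-space 3D
    points using a pinhole camera model. Pixels with depth <= 0 are
    treated as invalid and skipped."""
    points: List[Point3D] = []
    for v, row in enumerate(depth):      # v = pixel row index
        for u, z in enumerate(row):      # u = pixel column index
            if z <= 0:
                continue  # no valid depth reading at this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Hypothetical 2x2 depth map and intrinsics, for illustration only.
depth_map = [[2.0, 2.0],
             [0.0, 4.0]]
print(depth_map_to_points(depth_map, fx=1.0, fy=1.0, cx=0.5, cy=0.5))
# [(-1.0, -1.0, 2.0), (1.0, -1.0, 2.0), (2.0, 2.0, 4.0)]
```

Repeating this for every frame, and merging the points as the phone moves, is what builds up a map of the room.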

Developers have taken up Apple's invitation to build on its lidar technology. Existing AR apps improve automatically on lidar-equipped devices, with no complicated coding required.

Fast, accurate room scanning also improves occlusion, the ability to hide AR content behind physical objects, which makes scenes more immersive.
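Occlusion boils down to a per-pixel depth test: a virtual pixel is drawn only where it is closer to the camera than the real surface the sensor measured. The Python sketch below shows that test on tiny, made-up "images" of labels. The function name and data are hypothetical, and real AR engines typically run this test on the GPU over full depth maps rather than with Python lists.

```python
from typing import List, Optional

Color = str  # stand-in for a pixel color, e.g. "red"


def composite_with_occlusion(
    camera: List[List[Color]],
    real_depth: List[List[float]],
    virtual_color: List[List[Optional[Color]]],
    virtual_depth: List[List[float]],
) -> List[List[Color]]:
    """Overlay virtual content on the camera image, hiding any virtual
    pixel that lies behind the real surface measured at the same pixel."""
    out: List[List[Color]] = []
    for r, cam_row in enumerate(camera):
        out_row: List[Color] = []
        for c, cam_px in enumerate(cam_row):
            v_px = virtual_color[r][c]
            # Draw the virtual pixel only if it exists and is closer to the
            # camera than the real surface; otherwise keep the camera pixel.
            if v_px is not None and virtual_depth[r][c] < real_depth[r][c]:
                out_row.append(v_px)
            else:
                out_row.append(cam_px)
        out.append(out_row)
    return out


# Hypothetical 1x3 scene: a virtual cube 2 m away; a real chair 1 m away
# covers the middle pixel, so the cube is hidden there.
camera = [["wall", "chair", "wall"]]
real_depth = [[3.0, 1.0, 3.0]]
cube = [["cube", "cube", None]]
cube_depth = [[2.0, 2.0, 0.0]]
print(composite_with_occlusion(camera, real_depth, cube, cube_depth))
# [['cube', 'chair', 'wall']]
```

The better the real-world depth map, the cleaner the edges where virtual objects disappear behind real ones, which is where lidar's accuracy pays off.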

What Technology Has Lidar Besides the iPhone 12 Pro?

Lidar is new to phone cameras, but it already appears in plenty of other technology. PrimeSense developed the depth-sensing tech used in Kinect, Microsoft's older Xbox accessory. Apple bought PrimeSense in 2013 and has since introduced the face-scanning TrueDepth camera and lidar sensors.

Today lidar is popping up in self-driving cars and drones.

It's been around a while and doesn't seem to be going anywhere anytime soon.

Pros and Cons of Lidar Depth Sensing

Bringing lidar to the iPhone family is a bold move that adds new, laser-based depth scanning. But is it all that great?

Let's take a look at the pros and cons of lidar technology:

Pros

Cons

Final Thought

Lidar now has a prominent place next to the camera lenses and flash on the iPhone 12 Pro. Only time will tell whether Apple decides it is worth keeping around.

For now, lidar is popping up everywhere thanks to its fast, accurate depth scanning.